So you’ve been through Part 2: Scaling the Monolith, and you’re still thinking microservices might be the right answer? OK, let’s talk it out…

Part 3 - Moving to Microservices

There are some compelling reasons to move to microservices, but also some serious drawbacks. Let’s kick this off by making some statements about when the move to services makes sense.

Some reasons to consider Microservices:

- Separate teams in your organization manage separate features (services).

Microservices are often aligned with the organizational structure of your business, allowing a team to own its entire service. If an individual contributor has to implement a single user story (in the “agile” sense) across several services, they now need to coordinate their own changes across code repos, projects, and deployments, which is not conducive to a rapid development pace. If your team has more microservices than team members, you may be better off combining the services into a single manageable entity.

- You need to improve Scale, not necessarily Performance.

Here, “scale” means the maximum load the system can handle, and “performance” means the average response time when the system is not under heavy load. Microservices will almost always be slower when timing an individual request/response through the system, because every hop between services adds network latency. However, they will usually handle a higher peak load without failures, because there are more servers in the system to spread the load across, and messages can wait in a queue until system resources free up to process them.

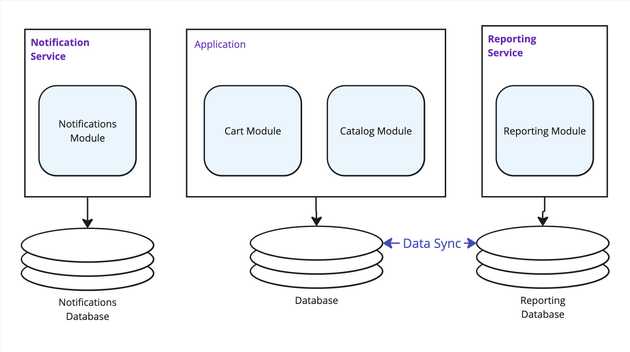

A related claim that sometimes comes up is that each microservice can have its own purpose-built, highly tuned data store. For something like a Reporting service, this might mean a separate Reporting DB with all the data denormalized ahead of time, so that queries often pull back a single record containing everything they need, avoiding table joins. The thing is, there is nothing about a microservice that facilitates this. A monolith can have more than one data store, so the same performance tuning could be done in a monolith. However, it relates to the point above: if a separate team within the organization is going to be responsible for that highly tuned data schema, then perhaps they should also own a microservice around it, so it comes back to an organizational decision rather than a performance one.
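To make the reporting example concrete, here is a minimal sketch using Python's built-in `sqlite3` with two in-memory databases. The table and column names (`customers`, `orders`, `order_report`) are hypothetical. The normalized store needs a join to build a report row, while the reporting store is filled ahead of time so the report query is a single-row lookup. Note that both databases live in one process here, which illustrates the point above: this tuning does not require a separate service.

```python
import sqlite3

# Normalized "transactional" store: building a report row requires a join.
oltp = sqlite3.connect(":memory:")
oltp.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Acme');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 75.0);
""")

JOIN_QUERY = """
    SELECT c.id, c.name, COUNT(o.id), SUM(o.total)
    FROM customers c JOIN orders o ON o.customer_id = c.id
    GROUP BY c.id, c.name
"""

# Denormalized reporting store: one pre-computed row per customer, no joins.
reporting = sqlite3.connect(":memory:")
reporting.execute(
    "CREATE TABLE order_report ("
    "customer_id INTEGER PRIMARY KEY, customer_name TEXT, "
    "order_count INTEGER, order_total REAL)"
)


def rebuild_report() -> None:
    """Copy the aggregated join results into the reporting table."""
    rows = oltp.execute(JOIN_QUERY).fetchall()
    reporting.executemany(
        "INSERT OR REPLACE INTO order_report VALUES (?, ?, ?, ?)", rows
    )
    reporting.commit()


def get_report(customer_id: int):
    """The report query is now a primary-key lookup returning one row."""
    return reporting.execute(
        "SELECT customer_name, order_count, order_total "
        "FROM order_report WHERE customer_id = ?",
        (customer_id,),
    ).fetchone()


rebuild_report()
print(get_report(1))  # ('Acme', 2, 100.0)
```

How and when `rebuild_report` runs (on a schedule, or on every order event) is a design choice this sketch leaves open.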

- Isolate non-critical or error-prone functionality (fault-isolation).

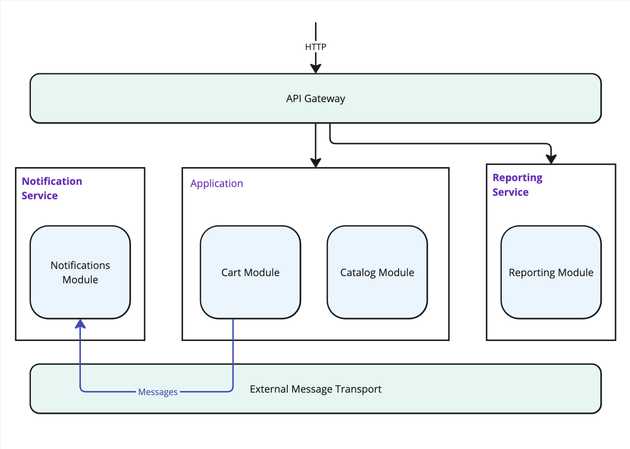

If you have problematic portions of your application that experience frequent outages, but that don’t need to be processed immediately, then a microservice makes sense. The notification/email sender is often a good example of this. It is usually a feature that does not need to be immediate (if a user receives an email an hour after an action, it’s usually not a critical problem), and because it relies on an external mail server, it could experience outages caused by a third-party mail service. If it were kept as part of the monolith, in the same process as the rest of the application, there is a risk that a crash could take down other business-critical system components.
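Here is a minimal sketch of that separation, assuming a hypothetical `send_email` call to a third-party provider. An in-process `deque` stands in for a durable message queue. In a real system the notification worker would run in its own service, so that even a crash there could not affect order processing. The business-critical path only enqueues the email, and the worker keeps failed sends queued for a later attempt.

```python
from collections import deque


class MailServerDown(Exception):
    """Raised by the (hypothetical) mail client when the provider is unavailable."""


notification_queue: deque = deque()  # stand-in for a durable message queue
orders: list = []


def place_order(order_id: str, email: str) -> str:
    """Business-critical path: record the order and enqueue the email, never send inline."""
    orders.append(order_id)
    notification_queue.append({"to": email, "order_id": order_id})
    return "accepted"


def send_email(message: dict, mail_server_up: bool) -> None:
    """Stand-in for a call to a third-party mail service."""
    if not mail_server_up:
        raise MailServerDown(message["to"])


def drain_notifications(mail_server_up: bool) -> int:
    """Notification worker: send what it can, keep failures queued for the next run."""
    sent = 0
    for _ in range(len(notification_queue)):
        message = notification_queue.popleft()
        try:
            send_email(message, mail_server_up)
            sent += 1
        except MailServerDown:
            notification_queue.append(message)  # retry later, not right now
    return sent
```

Orders keep being accepted while the mail provider is down; the emails simply go out on a later run of the worker.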

- Isolate long-running or CPU intensive operations.

Reporting features are often a good example of this. Report data often needs to join many DB tables and calculate a large amount of data. Imagine a reporting system where a user wants to see aggregated data for a 10-year period, which spans several million records. In a monolith environment, if the query takes 30 seconds or more, it will be tying up system resources, and a spot in the request queue, that are also needed for other critical business functions. Moving this off to its own isolated service can be beneficial (however, as we saw in Part 2, there are ways to achieve this with a monolith as well).

Challenges you will likely face:

- Interdependent deployments.

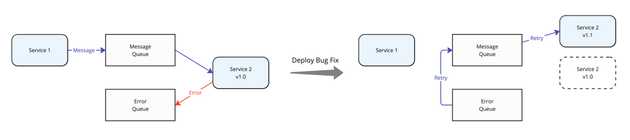

Services and the contracts between them will change. When one service changes, teams now need to coordinate the change across the deployment of multiple services. The “we should plan to deploy these 5 services at the same time” dance will often result in some failed messages between services, as they are in various states of updating. Consider using API versioning internally between the services: when a contract changes, the changing service should retain the previous version, so that already-deployed dependent services can continue to call it and receive the unchanged previous functionality. Then, when the next dependent service is ready to update, it can use the new API version of the service it depends on. This allows a seamless, staggered deploy of services without any of them failing. Alternatively, if you use message-queue-based communication between services, you could simply allow processing to fail for changed message contracts. Errored messages would end up in an error or dead-letter queue, and once the dependent service is also updated and deployed, the previously errored messages can be re-queued.
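A minimal sketch of serving two contract versions side by side, assuming a hypothetical contract change in which v1 returned a single `name` field and v2 splits it into `first_name` and `last_name`. In a web framework the two handlers would typically sit behind separate routes (for example `/v1/...` and `/v2/...`); here a dictionary lookup stands in for the router.

```python
from typing import Callable, Dict

# Hypothetical data owned by the customer service.
CUSTOMERS = {42: {"first_name": "Ada", "last_name": "Lovelace"}}


def get_customer_v1(customer_id: int) -> dict:
    """Previous contract, kept alive for callers that have not been redeployed yet."""
    c = CUSTOMERS[customer_id]
    return {"name": f"{c['first_name']} {c['last_name']}"}


def get_customer_v2(customer_id: int) -> dict:
    """New contract, adopted by each dependent service when it is ready."""
    c = CUSTOMERS[customer_id]
    return {"first_name": c["first_name"], "last_name": c["last_name"]}


# Both versions are served at the same time, so deploys can be staggered.
HANDLERS: Dict[str, Callable[[int], dict]] = {
    "v1": get_customer_v1,
    "v2": get_customer_v2,
}


def handle(version: str, customer_id: int) -> dict:
    """Route a request to the handler for the contract version the caller asked for."""
    handler = HANDLERS.get(version)
    if handler is None:
        raise ValueError(f"unsupported API version: {version}")
    return handler(customer_id)
```

Once no deployed service calls `v1` any more, its handler can be removed in a later release.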

- Debugging and Logging difficulties.

Triaging production issues becomes much more difficult when crossing service boundaries. Depending on your server infrastructure, the timestamps from logs on different servers may also be slightly skewed from each other, meaning log messages might not even be in quite the right order. Implementing good observability and traceability up front (for example, passing a correlation ID along with every request and including it in every log line) will help with this issue.
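One common traceability technique is a correlation ID: the first service to receive a request generates an ID, every service includes it in its log lines, and each service passes it on to the services it calls. Here is a minimal sketch using Python's standard `logging` and `contextvars` modules. The header name `X-Correlation-ID` is an assumption (a common convention, not a standard), and `orders-service` is a hypothetical service name. Dedicated tracing tooling such as OpenTelemetry covers this more completely.

```python
import contextvars
import logging
import uuid

# Assumed header name: "X-Correlation-ID" is a common convention, not a standard.
CORRELATION_HEADER = "X-Correlation-ID"

# Holds the ID for the request currently being handled (per thread or asyncio task).
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Stamp every log record with the current request's correlation ID."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


logger = logging.getLogger("orders-service")  # hypothetical service name
handler = logging.StreamHandler()
handler.setFormatter(logging.Formatter("%(asctime)s [%(correlation_id)s] %(message)s"))
handler.addFilter(CorrelationIdFilter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def handle_incoming(headers: dict) -> dict:
    """Reuse the caller's correlation ID, or start a new one at the system edge.

    Returns the headers to attach to any downstream call, so the same ID
    appears in every service's logs for this request.
    """
    cid = headers.get(CORRELATION_HEADER) or str(uuid.uuid4())
    correlation_id.set(cid)
    logger.info("processing request")
    return {CORRELATION_HEADER: cid}
```

Searching all services' logs for one ID then reconstructs a request's path, even when clock skew makes the timestamps unreliable.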

- Local development and testing difficulties.

It may no longer be feasible for a dev to run the entire suite of application services locally to try to reproduce a production issue. Integration testing of a single service can also be challenging, because assumptions need to be made about the contract with other services and the data they pass. Someone needs to own the contract and test data, and keep it updated when services change.

- Data syncing.

Microservices often end up with their own DB, which can then be tailored to their specific needs. This results in data being duplicated across services. The duplication will usually need to be custom-built, with measures for dealing with data getting out of sync, or with the need to entirely rebuild a database. DB backups can also get into an odd state: if one service’s DB fails and is restored from a day-old backup, its data will now also be a day out of sync with the other services. Often a nightly scheduled job is run to periodically ensure the data is in sync. Event sourcing is also often discussed, but it is difficult to transition an already-built application to.
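The comparison step of such a nightly sync job might look like the minimal sketch below. It assumes both sides can be loaded as dictionaries keyed by record ID, which only holds for small datasets; a real job would more likely page through records or compare checksums. The function name and record shape are hypothetical. Fixing the differences (re-fetching or re-publishing the affected records) is left out.

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]


def reconcile(source: Dict[str, Record], replica: Dict[str, Record],
              fields: List[str]) -> Tuple[List[str], List[str], List[str]]:
    """Compare a service's local copy against the data in the owning service.

    Returns (missing, stale, orphaned) record IDs:
      missing  - present in the source, absent from the replica
      stale    - present in both, but at least one of `fields` differs
      orphaned - present only in the replica (e.g. deleted upstream)
    """
    missing = sorted(k for k in source if k not in replica)
    orphaned = sorted(k for k in replica if k not in source)
    stale = sorted(
        k for k in source
        if k in replica
        and any(source[k].get(f) != replica[k].get(f) for f in fields)
    )
    return missing, stale, orphaned
```

After a DB is restored from a day-old backup, a run like this would surface the day of drift as missing and stale records.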

- Request Idempotency and Out of Order requests.

Eventually, a service will be called multiple times for the same message, or will receive messages out of order, either due to the “at least once” delivery guarantee of a message bus, or because an error somewhere in a chain of service calls triggered a retry. Every service call should be idempotent. You may need to consider this when choosing what to pass in a message or request: for example, “set the balance to 50” can safely be applied twice, while “add 10 to the balance” cannot.
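Here is a minimal sketch of a consumer that tolerates both duplicates and out-of-order delivery. It assumes each message carries a unique `message_id` (shared by redelivered copies) and a per-entity `version` number set by the sender; both fields are assumptions, not something every message bus provides. The message carries an absolute balance rather than a delta. In a real service, the processed IDs and versions would be stored durably, in the same transaction as the state change.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Message:
    message_id: str  # unique per message; redelivered copies share it
    account_id: str
    version: int     # assumed per-account sequence number set by the sender
    balance: int     # absolute value ("set balance to X"), not a delta


@dataclass
class AccountProjection:
    processed_ids: Set[str] = field(default_factory=set)
    balances: Dict[str, int] = field(default_factory=dict)
    versions: Dict[str, int] = field(default_factory=dict)

    def handle(self, msg: Message) -> bool:
        """Apply a message at most once; return True only if state changed."""
        if msg.message_id in self.processed_ids:
            return False  # duplicate delivery: already handled
        self.processed_ids.add(msg.message_id)
        if msg.version <= self.versions.get(msg.account_id, 0):
            return False  # out of order: a newer update was already applied
        self.versions[msg.account_id] = msg.version
        self.balances[msg.account_id] = msg.balance
        return True
```

If version 3 arrives before version 2, the late version 2 is ignored instead of overwriting newer data.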

Pulling apart the Monolith

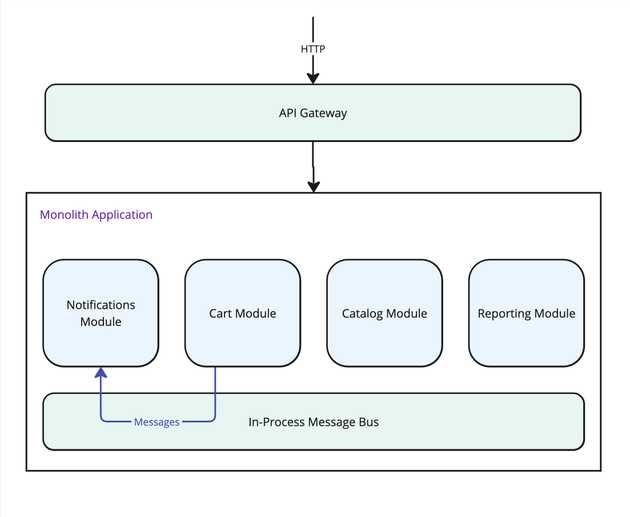

This is where the effort we put into our ‘feature slices’ and decoupled communication in Part 1 will hopefully start to pay off.

If we did a good job, the lines along which it makes sense to pull off a microservice should already be defined, in the form of one or more isolated features that are already decoupled. Selecting what to move to its own service should follow the guidance above:

- What will have its own team supporting it (organizational considerations)?

- What critical vs non-critical operations can be separated (fault isolation)?

- What needs to scale independently?

Splitting code

From our example in Part 1, we pre-built our features with the thought of separating them at this point, so it should be relatively easy to pull these off to their own services now.

Something that will likely need to change is the messaging infrastructure. If we had cross-feature communication set up with an in-memory queue or mediator pattern, we will now need to introduce an external message bus, because in-memory messages cannot cross process boundaries. This change will depend on your chosen messaging tooling.

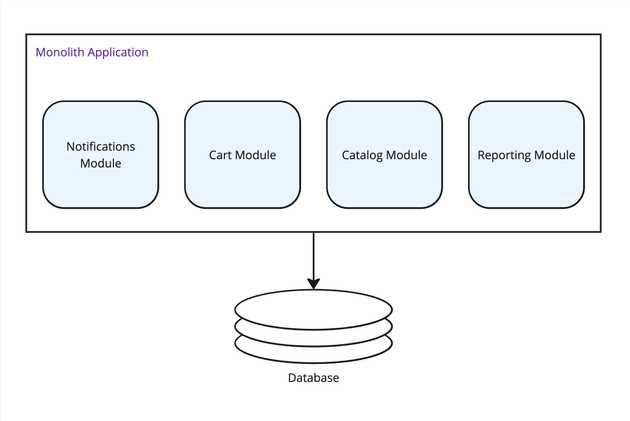
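One way to keep this change contained is to have feature code depend on a small bus interface rather than on a specific mechanism. Here is a minimal sketch, assuming a hypothetical two-method `MessageBus` protocol; the topic name `order.placed` and `register_email_feature` are also hypothetical. Only the in-memory implementation is shown.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Protocol

Handler = Callable[[dict], None]


class MessageBus(Protocol):
    """The only messaging API that feature code depends on (a hypothetical interface)."""

    def publish(self, topic: str, message: dict) -> None: ...

    def subscribe(self, topic: str, handler: Handler) -> None: ...


class InMemoryBus:
    """Monolith-era implementation: subscribers run in the same process, immediately."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def publish(self, topic: str, message: dict) -> None:
        for handler in self._handlers[topic]:
            handler(message)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)


def register_email_feature(bus: MessageBus, outbox: List[str]) -> None:
    """Feature code: written against MessageBus, not against a specific broker."""
    bus.subscribe("order.placed", lambda message: outbox.append(message["order_id"]))
```

After the split, a class wrapping your broker's client library (RabbitMQ, Kafka, SQS, or similar) would implement the same two methods, and `register_email_feature` would not need to change. Be aware that the semantics do change: delivery becomes asynchronous and at-least-once.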

Splitting data

As features split, it’s likely the data will make sense to be split as well, though extra work will need to go into making sure it stays in sync across services.

First instinct is usually to retain a “single source of truth” for each bit of data, letting one service “own” all the data while all other services query it. That can be a bit of an anti-pattern, though. If services now have to constantly query each other to aggregate chunks of data, you are introducing more network latency and more sources of failure. If one service crashes or goes offline, it could now prevent another service from working. Also, if many services are continually querying one service for data, they could overload it, preventing the rest of the services from scaling as expected.

Typically you will start duplicating data to each service that needs it, and let the team that supports that service tune the DB technology and schema to best fit that service. You won’t usually have a “single source of truth” for data any more.
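Here is a minimal sketch of such a duplicated, service-specific copy, assuming a hypothetical shipping service that subscribes to hypothetical `customer.updated` events from the customer service. It keeps only the fields it needs, shaped for its own queries. Because each event carries the full current value of those fields, applying the same event twice gives the same result. Out-of-order delivery is not handled in this sketch.

```python
from typing import Dict, Optional


class ShippingCustomerCopy:
    """The shipping service's own copy of customer data (a hypothetical example)."""

    def __init__(self) -> None:
        self._addresses: Dict[str, str] = {}

    def on_customer_updated(self, event: dict) -> None:
        # Keep only what shipping needs, pre-formatted for its own use.
        # An upsert of full field values is safe to repeat for duplicate events.
        address = ", ".join([event["street"], event["city"], event["postcode"]])
        self._addresses[event["customer_id"]] = address

    def shipping_address(self, customer_id: str) -> Optional[str]:
        # Answered locally: no call to the customer service at request time.
        return self._addresses.get(customer_id)
```

If the customer service is offline, shipping can still answer from its copy; the trade-off is that the copy may briefly lag behind.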

This data split ends up being a big chunk of work up front to get right, but without it, you could end up in a situation later where data in different services gets out of sync with no easy way to reconcile the differences.

For read-only data, you could also consider a cache to hold frequent reads to limit the need to query another service, or a shared read-replica copy of the data, as described in Part 2.
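A minimal sketch of the cache option: a time-to-live (TTL) cache wrapped around a lookup that would otherwise call another service. The `fetch` function is a hypothetical stand-in for that remote call, and the injectable `clock` exists so the expiry behavior can be tested. Cached data can be up to `ttl_seconds` stale, so this suits read-only or slowly changing data.

```python
import time
from typing import Callable, Dict, Generic, Hashable, Tuple, TypeVar

V = TypeVar("V")


class TTLCache(Generic[V]):
    """Cache results of a remote lookup for `ttl_seconds` to cut cross-service reads."""

    def __init__(self, fetch: Callable[[Hashable], V], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, V]] = {}

    def get(self, key: Hashable) -> V:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh enough: no network call
        value = self._fetch(key)  # e.g. an HTTP call to the owning service
        self._entries[key] = (now, value)
        return value
```

Repeated reads within the TTL never reach the owning service, which also limits the overload risk described above.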

Cross Service Communication

HTTP? RPC? Messaging? Well, it depends.

As a general recommendation, if your service calls can be asynchronous and not require a response, then Messaging / Events should be used. They tend to be the most reliable, durable, and manageable. When there are errors, you get error queues and retry logic.

It would be ideal if no service-to-service calls needed a response, but when you do need one, a synchronous HTTP or RPC request/response can be used.

Leveraging Messaging Error / Dead-Letter Queues

Above I pointed out that message-based communication is preferable for async commands and cited retry logic as a reason. I hear you saying “but gRPC has retries built into the client library, and HTTP retry isn’t hard to implement!” This is true, but…

With most HTTP or gRPC retry logic, the client will re-attempt the same request in a loop. The bonus feature of an error queue for messages is that those messages don’t have to be retried right now. You can have delayed retries! Why does that matter?

Imagine a scenario where your service has a bug (I know, it’s a stretch, but bear with me…) that causes it to always fail for a particular kind of message. Perhaps there is always an error when a message has a null value for a property, but that case wasn’t in your set of test messages for the service, so it didn’t cause an issue until production. These messages fail because of the service logic, not a networking or infrastructure outage, so no number of immediate retries will result in successfully processing them. With normal HTTP client retry, the client will likely just return an error when the called service keeps responding with an error. With messaging, the problematic messages will end up in the error queue. This gives you time to deploy a code fix that handles these messages appropriately, and then you can re-queue all the failed messages to run against the fixed code!

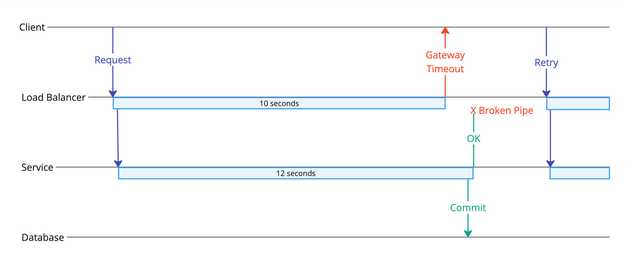
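The scenario above can be simulated with a minimal sketch. `QueueWithDeadLetter` is a hypothetical stand-in for a broker queue plus its error queue; real brokers implement dead-lettering themselves, with their own configuration. The buggy handler fails on a null `discount`, the immediate retries cannot help, the message is parked instead of lost, and it is re-queued once a fixed handler is deployed.

```python
from collections import deque
from typing import Callable, Deque, List


class QueueWithDeadLetter:
    """Minimal stand-in for a broker queue plus its error/dead-letter queue."""

    def __init__(self, max_attempts: int = 3) -> None:
        self.main: Deque[dict] = deque()
        self.dead_letter: List[dict] = []
        self.max_attempts = max_attempts

    def process_all(self, handler: Callable[[dict], None]) -> None:
        while self.main:
            message = self.main.popleft()
            for attempt in range(self.max_attempts):
                try:
                    handler(message)
                    break
                except Exception:  # any handler failure counts as a failed attempt
                    if attempt == self.max_attempts - 1:
                        # Immediate retries exhausted: park it instead of losing it.
                        self.dead_letter.append(message)

    def requeue_dead_letters(self) -> None:
        """After a fix is deployed, send parked messages back for processing."""
        self.main.extend(self.dead_letter)
        self.dead_letter.clear()


processed: List[int] = []


def buggy_handler(message: dict) -> None:
    processed.append(message["discount"] * 2)  # TypeError when discount is None


def fixed_handler(message: dict) -> None:
    processed.append((message["discount"] or 0) * 2)  # treats a null discount as 0
```

Running `process_all(buggy_handler)` leaves the null-discount message in `dead_letter`; after `requeue_dead_letters()`, `process_all(fixed_handler)` processes it successfully.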

HTTP Load Balancer details

One HTTP failure case to be aware of, especially with chained calls through services, is the load balancer Gateway Timeout (HTTP 504), which can be a real point of frustration. Remember that your services are clustered and probably behind a load balancer, and a load balancer is really just another HTTP server in the chain. Most LBs have some default timeout (for example, the AWS ELB default timeout is 60s, but it is configurable). If the chain of HTTP calls between services takes longer than this timeout, the LB will return a Gateway Timeout even though the application is still processing the request, and it could finish successfully and commit data. This can be handled through careful retry logic and idempotent request handling, but it is usually only a real problem for data-write operations. That is why I don’t generally like to use HTTP/synchronous requests for Write/Command operations, though they are fine for Read/Query operations.

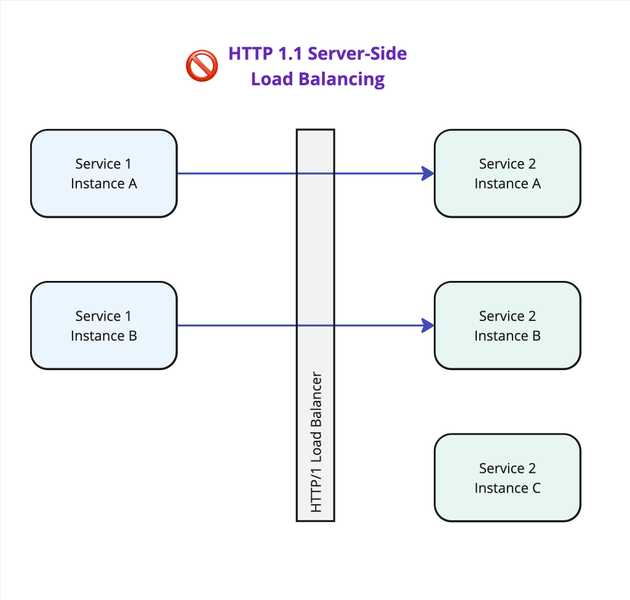
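Here is a minimal sketch of the “careful retry logic and idempotent request handling” mentioned above, using an idempotency key. The client generates one key per logical write and reuses it on every retry, and the server returns the stored result for a key it has already committed. `PaymentService`, `GatewayTimeout`, and `call_with_retry` are hypothetical names. A real server would need to store the key durably and atomically with the write, and handle concurrent retries.

```python
import uuid
from typing import Callable, Dict


class GatewayTimeout(Exception):
    """What the caller sees when the load balancer gives up (HTTP 504)."""


class PaymentService:
    """Server side: remembers the result for each idempotency key it has committed."""

    def __init__(self) -> None:
        self.results: Dict[str, str] = {}
        self.charges = 0

    def charge(self, idempotency_key: str) -> str:
        if idempotency_key in self.results:
            return self.results[idempotency_key]  # a retry of a committed request
        self.charges += 1
        result = f"charge-{self.charges}"
        self.results[idempotency_key] = result
        return result


def call_with_retry(send: Callable[[str], str], attempts: int = 3) -> str:
    """Client side: generate the key once and reuse it on every retry."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    key = str(uuid.uuid4())
    for _ in range(attempts - 1):
        try:
            return send(key)
        except GatewayTimeout:
            pass  # the request may still have committed; retry with the same key
    return send(key)  # final attempt: let any error propagate
```

If the first attempt commits but the LB times out, the retry returns the original result instead of charging twice.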

gRPC Load Balancer details

If you choose to leverage gRPC, pay special attention to its behavior when passing through a load balancer. Since gRPC uses a persistent connection and sends all requests over it, an LB that balances connections rather than individual HTTP/2 requests will route the connection once and then use it for all requests between those services, resulting in a 1-to-1 relationship between service instances.

In this example:

- gRPC from Service 1 Instance A would open and keep open a connection to Service 2 Instance A.
- Likewise, Service 1 Instance B connects to Service 2 Instance B.
- Then all requests from Service 1 Instance A get processed by Service 2 Instance A.
- All requests from Service 1 Instance B get processed by Service 2 Instance B.
- No requests are processed by Service 2 Instance C.

This is likely not what you intended.

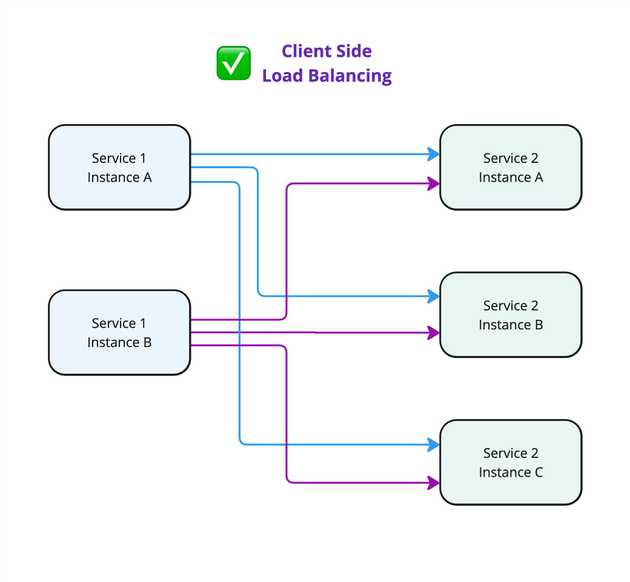

One option is “client-side” load balancing, where the client takes a list of all server IP addresses and balances requests between them itself (no server-side load balancer needed). This is implemented by the Google gRPC client.

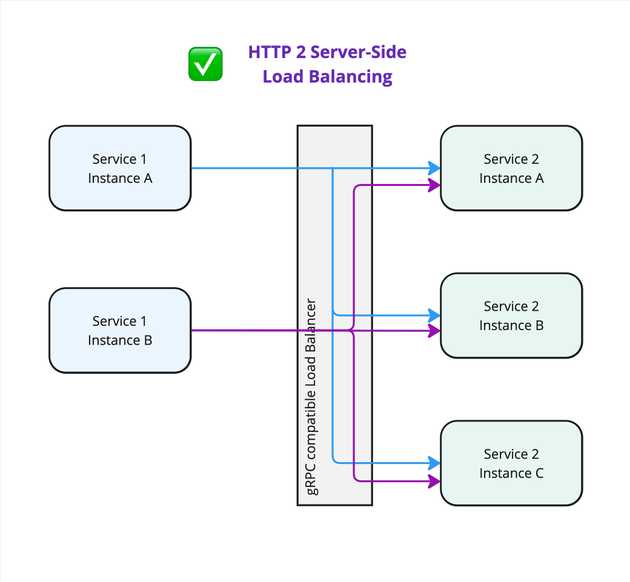
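To illustrate the idea behind client-side balancing, here is a minimal round-robin picker over a list of backend addresses. The addresses are hypothetical, and with gRPC itself you would normally configure the client's built-in `round_robin` load-balancing policy (with a name resolver that returns all instances) rather than write this yourself. This sketch only shows why every instance, including Instance C, now receives traffic.

```python
import itertools
from collections import Counter
from typing import Iterable, List


class RoundRobinPicker:
    """Client-side balancing: spread calls over every known backend address."""

    def __init__(self, addresses: Iterable[str]) -> None:
        self._addresses: List[str] = list(addresses)
        if not self._addresses:
            raise ValueError("need at least one backend address")
        self._cycle = itertools.cycle(self._addresses)

    def pick(self) -> str:
        """Return the next backend in rotation."""
        return next(self._cycle)


# Hypothetical addresses for Service 2 Instances A, B and C.
backends = ["10.0.0.1:50051", "10.0.0.2:50051", "10.0.0.3:50051"]
picker = RoundRobinPicker(backends)
calls = Counter(picker.pick() for _ in range(9))
print(calls)  # each instance receives 3 of the 9 calls
```

The trade-off is that every client must keep its list of backends up to date as instances come and go.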

Alternatively, you can use a special HTTP/2 or gRPC compatible LB on the server side. Then the client would open and maintain a connection to the LB, and the LB would then open connections to the servers and balance requests. This is only implemented by some proxies, for example Envoy.

Key Takeaways:

- The move to Microservices should be somewhat dependent on the organization and team structure.

- Considerations should include how data may need to be replicated and kept in sync between services.

- Microservices are best suited for highly independent features that do not require many calls to other services.

- Microservices will usually improve the overall peak load a system can handle, possibly at the expense of individual request latency.

- Consider message-based communication for asynchronous Commands, and synchronous HTTP or RPC communication for Queries.

- These are all generalities. The real implementation details will be specific to your organization and business needs.