Welcome back to this series on architecting applications and systems with scaling in mind.

In Part 2 of this series, we are going to take the sliced/modular monolith application that we talked about in Part 1, and start looking at different ways to deploy the system to improve performance at scale.

Part 2 - Scaling the Monolith

We have our monolithic application that provides some API endpoints and interacts with a database. Now we want to look at ways we can deploy the system in a production environment and how it affects the ability to perform under load.

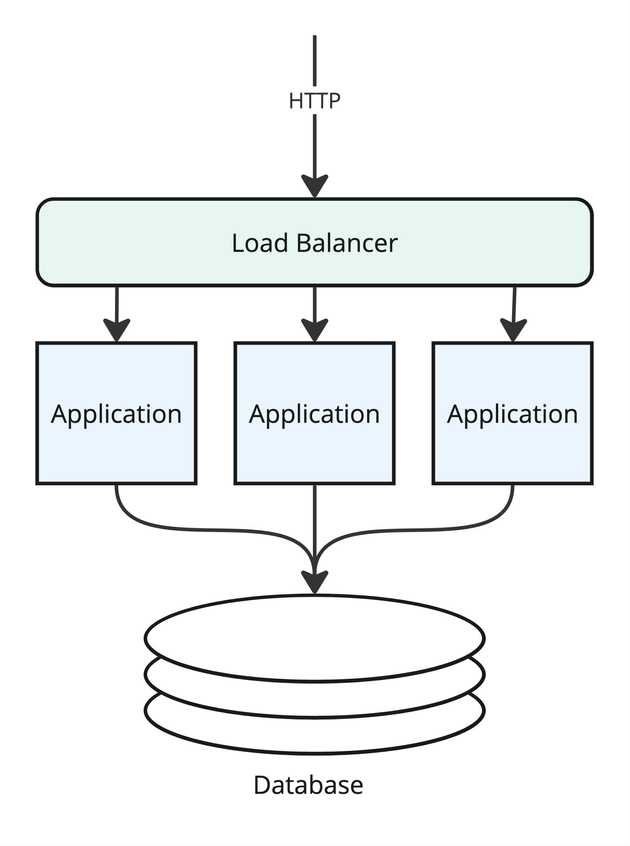

Starting with a Load Balanced Cluster

We’ll start with the one everyone knows - the load-balanced cluster. We start up multiple instances of our application and have a load balancer handle distributing load.

This allows us to scale horizontally by adding more servers to our cluster to accommodate load. You could even use dynamic scaling to automatically add or remove servers from the cluster to scale with load.

This Improves:

- Scaling when CPU and/or Memory becomes the bottleneck.

- Provides failover for a crashed server instance.

Shortcomings:

- Only as good as the load balancer. Depending on how the load balancer distributes requests, some requests can still be delayed on a busy server even when other servers have spare capacity.

- More likely to overload the database, since many servers are now querying it.
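
To make the first shortcoming concrete, here is a minimal sketch comparing two common distribution strategies. The class names, server names, and request counts are all hypothetical and only for illustration; a real load balancer tracks this state itself.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands requests to servers in a fixed rotation, ignoring how busy each one is."""

    def __init__(self, servers):
        self._rotation = cycle(servers)

    def pick(self, active_requests):
        # The current load is deliberately ignored by this strategy.
        return next(self._rotation)


class LeastConnectionsBalancer:
    """Hands each request to the server with the fewest in-flight requests."""

    def __init__(self, servers):
        self._servers = list(servers)

    def pick(self, active_requests):
        return min(self._servers, key=lambda s: active_requests.get(s, 0))


# Hypothetical snapshot: app-1 is stuck on slow requests, the others are idle.
servers = ["app-1", "app-2", "app-3"]
active = {"app-1": 12, "app-2": 0, "app-3": 0}

rr_choice = RoundRobinBalancer(servers).pick(active)        # app-1, despite its backlog
lc_choice = LeastConnectionsBalancer(servers).pick(active)  # app-2, an idle server
```

With round-robin, the next request can queue behind the backlog on app-1 while two servers sit idle, which is the kind of delay described above.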

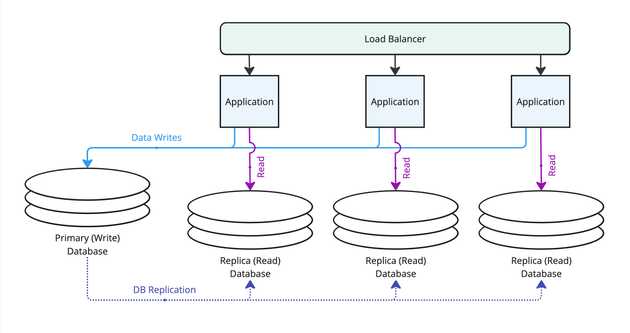

Adding a Database Read-Replica

As we add application instances to our cluster to increase scale, we will reach a point where our database becomes the bottleneck. When you find your database instance to be under too heavy a load, this is a good time to introduce a read replica.

This is somewhat dependent on your specific database technology. For the sake of this blog series, I’ll make some assumptions around PostgreSQL, since it provides a nice built-in replication scheme, but most SQL databases are likely to behave similarly. Be aware that replication delays and locking behavior may affect the overall scaling outcome, so you should do some research into whatever database you choose.

Back in Part 1, I mentioned that when architecting our application and splitting out features into slices, it would be a good idea to keep “CQRS” in mind, at least in terms of what operations really need to “Write” data, versus only needing to “Read” data. If we did that split well, then you will notice that CQRS plays nicely with having separate database connections for Write and Read databases. Our read-only Queries can now be shifted to the read replica database connection.
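
As a rough sketch of that idea, the routing decision can be as small as the snippet below. The `DatabaseRouter` class, the `"command"`/`"query"` labels, and the connection strings are assumptions made for illustration, not something defined in Part 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabaseRouter:
    """Chooses a connection string based on whether an operation writes or only reads."""

    primary_dsn: str  # read/write primary database
    replica_dsn: str  # read-only replica

    def dsn_for(self, operation: str) -> str:
        if operation == "command":  # anything that writes data
            return self.primary_dsn
        if operation == "query":    # read-only work can go to the replica
            return self.replica_dsn
        raise ValueError(f"unknown operation type: {operation!r}")


# Hypothetical connection strings; substitute your own hosts and credentials.
router = DatabaseRouter(
    primary_dsn="postgresql://app@db-primary.internal/app",
    replica_dsn="postgresql://app@db-replica.internal/app",
)

write_target = router.dsn_for("command")
read_target = router.dsn_for("query")
```

If the slices already separate their command handlers from their query handlers, each handler only needs to ask the router for the right connection.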

Related to Microservice Recommendations of Database-per-Service

If you have read up on microservices before reading this series, you may have come across the general recommendation that each service should have its own database, which helps spread out the load and lets individual parts of the system be tuned for performance. By using simple database replication, we gain part of that benefit: the ability to spread the load of heavy data reads. This approach minimizes the overall work we need to put into architecting the system for scaling, because we don’t have to manage entirely separate databases, don’t have to custom design schemas per service, and most importantly can rely on the built-in database replication features to handle the eventual consistency of data. In a “Microservice Purist” world, you would have to write and maintain your own data replication and syncing tooling, which might be overkill for what we actually need.

Eventual Consistency

Note that from this point on in our scaling adventure, we need to worry about “eventual consistency”. Replication is not instant, so a read from the replica shortly after a write may return stale data. You can read up on this from other sources, but it is something that should be designed into your entire system, starting from the product design and UI/UX design phases.

For read queries that need up to date data, you can continue to read from the Primary (write) database, since that database instance will have all the standard transactions and locking schemes you expect.
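
One possible way to decide which reads go to the primary is sketched below. The `needs_fresh` flag maps directly to the point above; the extra rule of pinning a user to the primary for a few seconds after their own write is an assumption added here as one example policy, and the class name and 5-second window are hypothetical.

```python
import time


class FreshnessAwareRouter:
    """Sends reads to the primary when stale data would be a problem."""

    def __init__(self, pin_seconds=5.0, clock=time.monotonic):
        self._pin_seconds = pin_seconds  # assumed upper bound on replication lag
        self._clock = clock              # injectable so the policy is easy to test
        self._last_write = {}            # user id -> time of that user's last write

    def record_write(self, user_id):
        self._last_write[user_id] = self._clock()

    def target_for_read(self, user_id, needs_fresh=False):
        # Reads explicitly marked as needing current data always use the primary.
        if needs_fresh:
            return "primary"
        # Otherwise, pin the user to the primary shortly after their own write.
        last = self._last_write.get(user_id)
        if last is not None and self._clock() - last < self._pin_seconds:
            return "primary"
        return "replica"


router = FreshnessAwareRouter()
router.record_write("user-1")
just_wrote = router.target_for_read("user-1")  # "primary": inside the pin window
other_user = router.target_for_read("user-2")  # "replica": no recent write
```

The window only helps if it is longer than your actual replication delay, so treat the number as something to measure rather than guess.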

This Improves:

- Scaling the database when our system performance becomes database bound.

- Works well for read-heavy applications.

- Depending on the database you use, leverages what is built into the database for data syncing without having to build our own.

- Mostly transparent to the application. Changing the database it uses should be as simple as updating the connection string in the app config.

Shortcomings:

- We now move into the world of “eventual consistency”.

- Still a single write database, so write-heavy applications may not benefit.

Adding a Read-Replica per Server

If our application is very data-read heavy or performs a lot of complex queries, we can take this one step further and set up a database read replica per app server in the cluster. Ideally, your IaC code would provision these replicas automatically whenever you change the size of the cluster.
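
As a toy illustration of the resulting topology (not real IaC), the function below pairs each app server with its own replica while every server shares the single primary. The function name and host names are hypothetical.

```python
def plan_cluster(size, primary="db-primary"):
    """Pairs each app server with a dedicated read replica and the shared primary."""
    return [
        {
            "app": f"app-{i}",
            "write_db": primary,            # every server writes to the same primary
            "read_db": f"db-replica-{i}",   # each server reads from its own replica
        }
        for i in range(1, size + 1)
    ]


plan = plan_cluster(3)
```

Growing the cluster from 3 to 4 servers would add one more app/replica pair and leave the primary unchanged.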

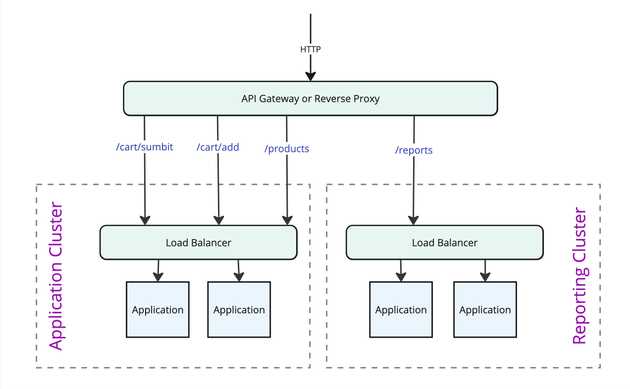

Adding an API Gateway to spread request types

This one simple scaling trick that microservices don’t want you to know about!

Let’s say we have some scaling issues around individual parts of our application that are either CPU or database-read intensive. A “reporting” service may be a good example of this, where building a complex report with numerous SQL joins could take 30 seconds or more.

Through our API contract with the outside world, we can segment these by URL. From our monolith in Part 1, we have a /reports API path defined. Let’s assume that /reports is used relatively infrequently, but keeps bogging down our cluster due to its heavy CPU and database use. We don’t want it clogging up the processing pipeline for the rest of our cluster.

This is the point where a lot of people will run toward microservices and try to pull a “Reporting Service” off from the monolith, but we might not need that yet. Try this approach first.

Let’s add an “API Gateway”, or even just a “Reverse Proxy” (for example, Nginx would suffice) that can route traffic based on the HTTP request path. We can use this to isolate the “expensive” /reports requests, and route them to their own special cluster that is dedicated to reporting.

Note that all the application instances across the clusters are the same monolithic app code just with some infrastructure and config tweaks to isolate them.

We have effectively shifted our computationally expensive reporting operations to their own cluster, with its own (read-only) database instance, allowing us to scale a small portion of our app functionality, just like a “microservice” but without the complication of breaking up our code!
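
The routing decision itself is small. Here is a minimal sketch of path-based routing, assuming two hypothetical upstream names (`reporting-cluster` and `main-cluster`). In practice this rule would live in your gateway or reverse proxy configuration rather than in application code, and the exact matching semantics depend on the tool you use.

```python
# Ordered from most specific to least specific; "/" acts as the catch-all.
ROUTES = [
    ("/reports", "reporting-cluster"),  # hypothetical dedicated reporting cluster
    ("/", "main-cluster"),              # hypothetical cluster for everything else
]


def upstream_for(path):
    """Returns the upstream whose path prefix matches the request path."""
    for prefix, upstream in ROUTES:
        # Match the prefix itself or anything beneath it, on a path-segment boundary.
        if prefix == "/" or path == prefix or path.startswith(prefix + "/"):
            return upstream
    raise LookupError(f"no route for {path!r}")


reports_target = upstream_for("/reports/monthly")
orders_target = upstream_for("/orders/42")
```

Both upstreams run the same monolith build; only the routing rule and each cluster's config differ.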

This Improves:

- Scaling the app when we have some resource or database bound operations that would otherwise clog up the application request queue.

- Avoids code rewrite / rework.

Shortcomings:

- Still a single write database, so write-heavy features may not benefit.

Key Takeaways:

- Monolithic applications can be scalable.

- Leverage database replication to scale read-intensive portions of the app.

- Leverage an API Gateway or Reverse Proxy to split load based on URLs.

Upcoming in Part 3: Moving to Microservices

At this point, you should be able to come up with a plan to effectively scale your monolithic application. However, if performance is still a problem, there may be compelling reasons to start pulling functionality off into independent microservices. In Part 3, we will look into some of these reasons and decide if a microservice approach is a good path for you.